Qilang Ye (叶启朗)

Research Assistant
College of Computer Science, Nankai University
Email: rikeilong[AT]gmail.com
[Google Scholar] [GitHub]

About Me

I am currently a PhD candidate at Nankai University. My research interests include multimodal learning and multimodal large language models. Recently, I have been working on alignment learning to improve LLM outputs, for example by reducing audio-visual hallucinations and ambiguity.

Publications (* co-first authors)

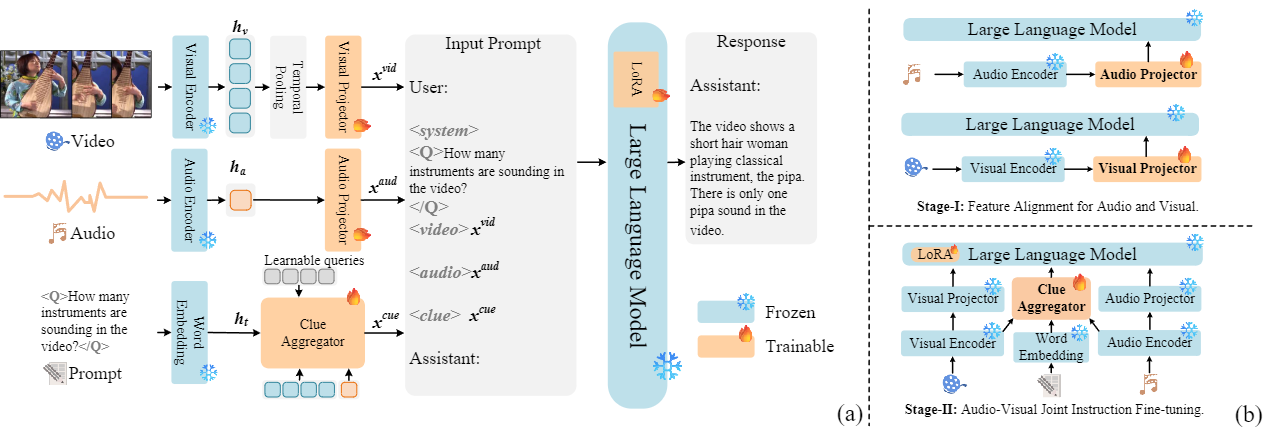

CAT: Enhancing Multimodal Large Language Model to Answer Questions in Dynamic Audio-Visual Scenarios
Qilang Ye, Zitong Yu, Rui Shao, Xinyu Xie, Philip Torr, Xiaochun Cao
European Conference on Computer Vision (ECCV), 2024. [paper] [Code]

CAT+: Investigating and Enhancing Audio-visual Understanding in Large Language Models
Qilang Ye, Zitong Yu, Xin Liu
IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025.3582389 [paper]

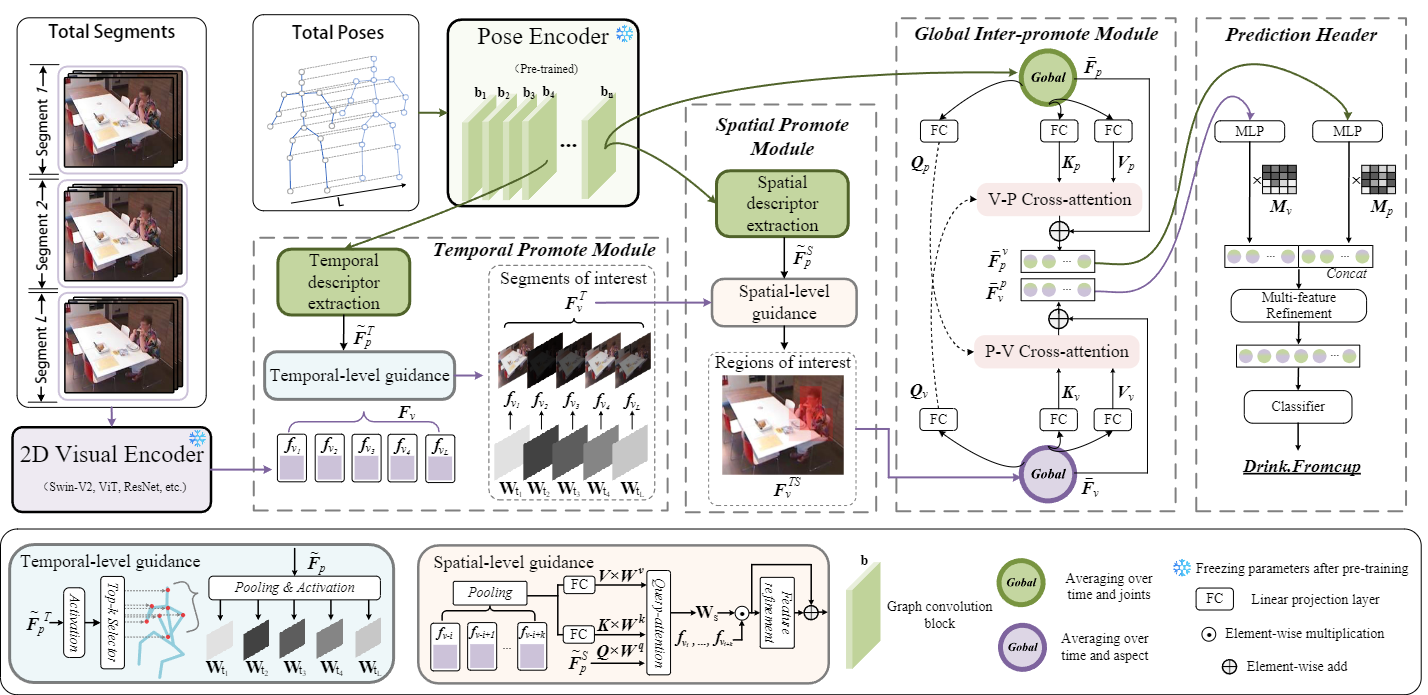

Pose-promote: Progressive Visual Perception for Activities of Daily Living
Qilang Ye, Zitong Yu
IEEE Signal Processing Letters (IEEE SPL) [paper] [Code]

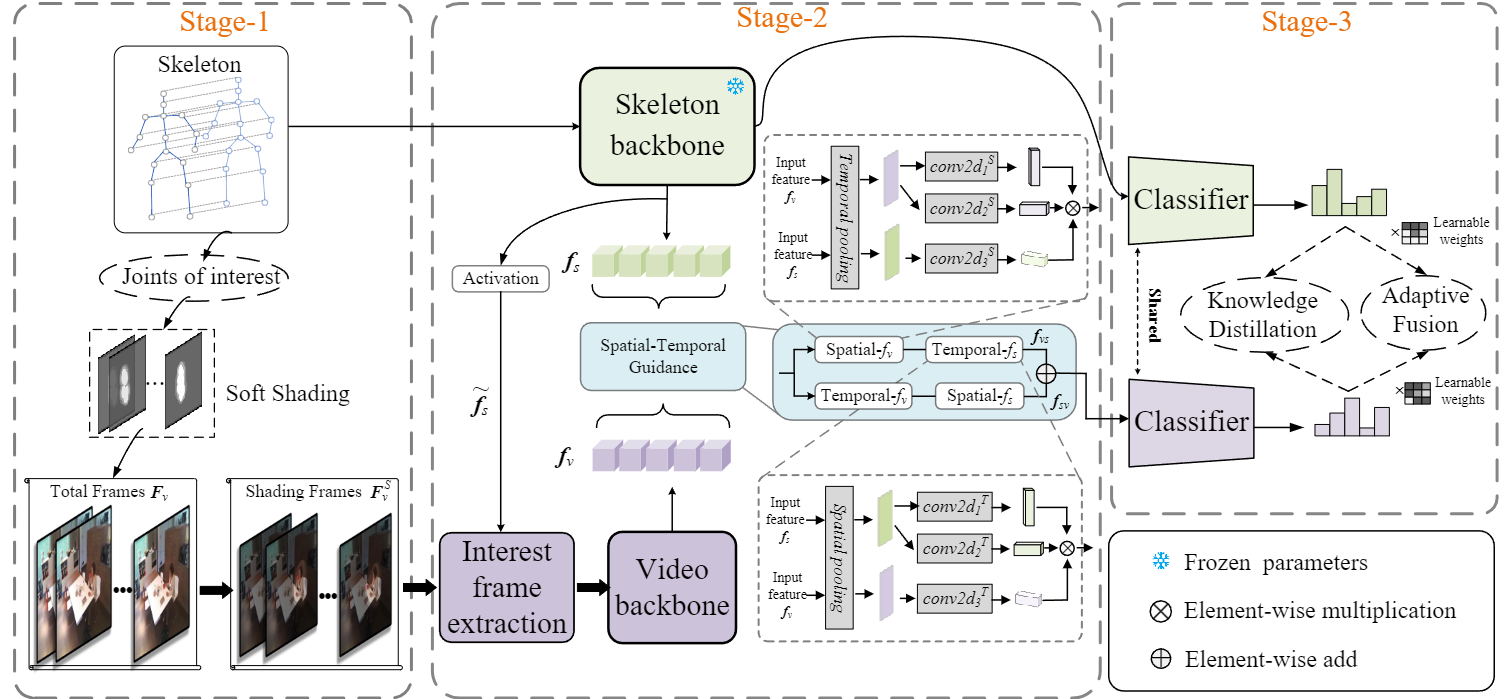

3sG: Three-stage Guidance for Indoor Human Action Recognition
Hai Nan*, Qilang Ye*, Zitong Yu, Kang An
IET Image Processing [paper] [Code]

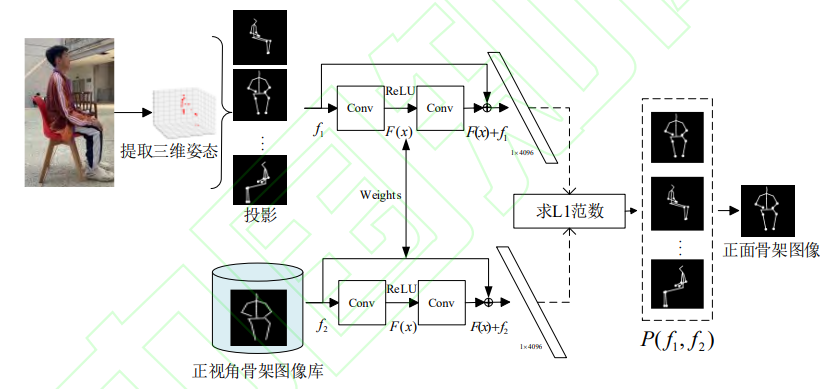

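A Human-Skeleton-Based Method for Sitting Posture Recognition at Arbitrary Angles (一种基于人体骨架的任意角度坐姿识别方法)
Qilang Ye, Hai Nan, Daixin Li
Chinese Core Journal (中文核心) [paper]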
